Now you can try out Shinro puzzles in your Web browser!

There are 120 puzzles there ranging from easy to hard. Can you solve them?

Posted in evolutionary computing, toys

I’ve finally finished the web site for my new book of puzzles.

Go there and check out the book. You can even download a free edition!

Posted in computing, evolutionary computing, toys, Uncategorized

Because my new poster gets to go to Istanbul, Turkey for next week’s EvoStar Evolutionary Computation conference without me. I just shipped it off this morning.

Combining puzzle-making with biologically-inspired computer algorithms. Because life isn’t already crazy enough.

Click the above image to see the large version of the poster. Or, you can get a copy of the original PDF. And download the entire 10 page paper if you really want to bore yourself.

We decided not to travel to Istanbul for the conference because of the expense and difficulty in getting there. Alas, I will not be able to experience the surreal juxtaposition of the geeky Mario A.I. competition amidst the majestic ruins of the ancient Roman, Byzantine, and Ottoman empires.

My other posters: Evolutionary art (GECCO 2007), Cracking substitution ciphers (GECCO 2008)

Want to make $500?

All you have to do is make Mario come to life with an artificially intelligent computer program. And he has to survive as many randomly-generated game levels as possible from the Infinite Mario Bros project.

Piece of cake!

A contest entry. From the entrant: “Here’s my attempt at an AI for the Mario AI Competition. You can see the path it plans to go as a red line, which updates when it detects new obstacles at the right screen border. It uses only information visible on screen. I’ve included a slow-motion part in the middle where it gets hairy for Mario. :-)”

Here is the same intelligent agent playing a longer level.

These agents will be unstoppable once they replicate in the Matrix.

Posted in computing, evolutionary computing, tech, toys

You enter a contest. A million dollars is at stake. Forty-one thousand teams from 186 different countries are clamoring for the prize and the glory. You edge into the top 5 contestants, but there is only one prize, and one winner. Second place is the first loser. What do you do?

Team up with the winners, of course.

The Netflix Prize is a competition that is awarding $1,000,000 to whoever can come up with the best improvement to Netflix’s movie recommendation engine. The engine sifts through massive amounts of movie rental data to predict how much users will like other movies. For example, if you like Coraline, you may also like Sweeney Todd. But Netflix’s recommendation engine isn’t great at making predictions, so the company decided to offer a bounty to anyone who could come up with a system that makes a verifiable 10% improvement in Netflix’s prediction accuracy.

The contest recently ended with two teams jockeying for the prize. During the two and a half years the contest was active, several individuals and small groups dominated the leaderboard, with competition among 41,000 teams from 186 different countries. The competition became fierce, and coalitions began to form. The team “BellKor’s Pragmatic Chaos” formed from the separate teams “BellKor” (part of the Statistics Research Group in AT&T Labs), “BigChaos” (a group of folks who specialize in building recommender systems), and “PragmaticTheory” (two Canadian engineers with no formal machine learning or mathematics training). Another conglomerate team, “The Ensemble”, is made up of “Grand Prize Team” (itself a coalition of members combining strategies to win the prize), “Vandelay Industries” (another mish-mash of volunteers), and “Opera Solutions”.

At first, it looked like BellKor’s Pragmatic Chaos won. But now it looks like The Ensemble won. Netflix says it will verify and announce the winner in a few weeks.

Who the hell cares? Why is this interesting in the slightest? Ten percent seems so insignificant.

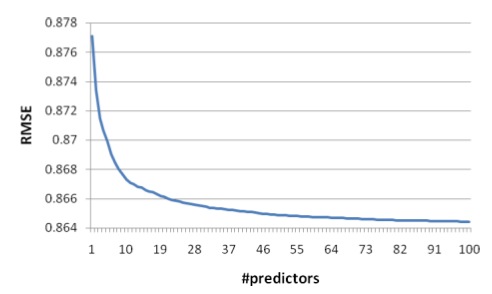

Well, predicting human behavior seems impossible. But this contest has clearly shown that some amount of improvement in prediction of complicated human behavior is indeed possible. And what’s really interesting about the winning teams is that no single machine learning or statistical technique dominates by itself. Each of the winning teams “blends” a lot of different approaches into a single prediction engine.

Artificial neural networks. Singular value decomposition. Restricted Boltzmann Machines. K-Nearest Neighbor Algorithms. Nonnegative matrix factorization. These are all important algorithms and techniques, but they aren’t best in isolation. Blending is key. Even the teams in the contest were blended together.

United we stand.

Each technique has its strengths and weaknesses. Where one predictor fails, another can take up the slack with its own unique take on the problem.
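To make the blending idea concrete, here is a minimal sketch in Python. Everything in it is invented for illustration: the ratings, the two hypothetical predictors, and the plain 50/50 average, which is the simplest possible blend and not what any of the contest teams actually did. Accuracy is measured with root-mean-square error (RMSE), the yardstick the Netflix Prize used.

```python
import math

def rmse(predicted, actual):
    """Root-mean-square error between two equal-length lists of ratings."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))

def blend(predictions, weights):
    """Weighted average of several predictors' outputs, rating by rating."""
    total = sum(weights)
    return [sum(w * p for w, p in zip(weights, column)) / total
            for column in zip(*predictions)]

# Made-up data: true ratings, plus two hypothetical predictors
# that make their mistakes on different movies.
actual      = [4.0, 3.0, 5.0, 2.0, 1.0]
predictor_a = [4.5, 3.5, 4.0, 2.0, 1.0]   # stumbles on the first three movies
predictor_b = [4.0, 3.0, 5.0, 3.0, 2.0]   # stumbles on the last two

blended = blend([predictor_a, predictor_b], weights=[1.0, 1.0])

for name, preds in [("A", predictor_a), ("B", predictor_b), ("blend", blended)]:
    print(f"{name:>5}: RMSE = {rmse(preds, actual):.3f}")
```

On this toy data the blend scores a lower RMSE than either predictor alone, because each one's errors get diluted wherever the other one is right. Real blends learn the weights from held-out data rather than fixing them by hand.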

BellKor, in their 2008 paper describing their approach, made the following conclusions about what was important in making predictions:

Yehuda Koren, one of the members of BellKor’s Pragmatic Chaos and a researcher for Yahoo! Israel, went on to publish another paper that goes into more juicy details about their team’s techniques.

I hope to see more contests like this. The KDD Cup is the most similar one that comes to mind. But where is the ginormous cash prize???

Posted in computing, evolutionary computing, math, movies, science, tech, Uncategorized

You’re sitting at your computer, writing your next awesome computer program. You think, “I want to run my new program. But the computer I have is too slow and too boring to run it on.”

You glance over at the petri dish in your biology lab. “What if I could deploy my program as DNA, and the outcome of my program gets expressed as proteins and genes in a real cell?”

Sounds kind of crazy. But Microsoft is researching this.

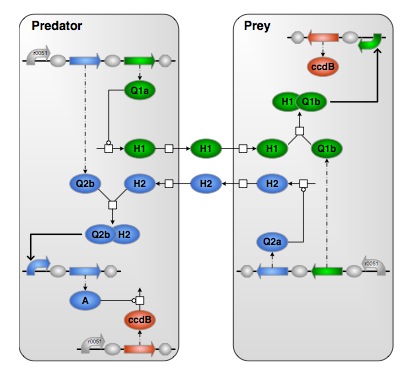

In their paper “Towards programming languages for genetic engineering of living cells”, Microsoft UK researchers Michael Pedersen and Andrew Phillips have developed a programming language that translates logical concepts into models of biological reactions that run in simulators. Reactions that have favorable results have the potential to be synthesized into DNA for insertion into real cells, achieving some level of cyborgian awesomeness that we can only just begin to imagine. (Insert obligatory Blue Screen of Death joke here.)

More info here. And be sure to check out the full paper here.

This impressive augmented reality demo from GE inserts computer-generated 3D objects into live video. First, watch the short video. Then, try it yourself.

Israeli musician “Kutiman” took a big pile of seemingly random YouTube video clips and used them as instruments in his own musical compositions. I could not stop listening to these. My favorites are tracks 2 and 3. His site is overloaded at the time of this post; for now you can see samples here, here, and here.

Can you be an awesome DJ using nothing but a web browser and your computer’s keyboard? Yes you can.

A curious programmer, inspired by Roger Alsing’s evolution of the Mona Lisa, asks if the technique could be a good way to compress images. Also take a look at the nice online version of the image evolver he wrote, in which you can set your own target image.

Hilarious Livejournal diary done in the style of Rorschach from the Watchmen comic book series.

The Crisis of Credit, Visualized – An extremely well-produced video describing the credit crisis in simple terms.

instantwatcher.com – “Netflix for impatient people”. A remix of the Netflix site that is “about a quadrillion times easier to browse than Netflix’s own site”.

$timator: How much is your web site worth?

Cursebird. A real time feed of people swearing on Twitter. THANK YOU, INTERNET!

Leapfish. An interesting new meta-search engine with a clean interface. “It’s OK, you’re not cheating on Google.”

Twittersheep. “Enter your twitter username to see a tag cloud from the ‘bios’ of your twitter flock.”

PWN! YouTube. This is a great idea. You just type “pwn” in front of “youtube” in the URL, and voilà: instant links for downloading and saving the videos.

Here are some interesting tidbits of evolutionary computing to honor Darwin’s birthday yesterday:

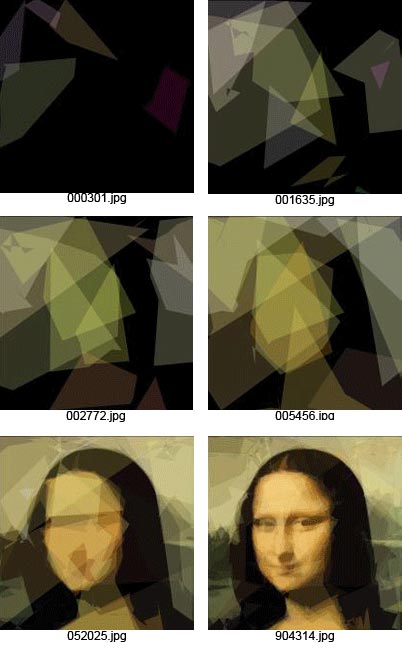

Evolution of Mona Lisa

Roger Alsing’s idea is to start with a random pile of polygons. Random mutations are applied to the polygons. The result is compared to the Mona Lisa source image, and mutations resulting in improvements are kept. Over many generations, the evolved image begins to resemble the Mona Lisa.
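If you want to play with the idea, here is a toy sketch of that mutate-compare-keep loop in Python. It is not Alsing's program. To stay self-contained it swaps in rectangles for polygons, an 8x8 grid of gray values for the painting, and a made-up target image, and every name in it is my own invention.

```python
import random

SIZE = 8          # the "image" is an 8x8 grid of grayscale values in 0..255
NUM_SHAPES = 6    # rectangles stand in for the polygons

def render(shapes):
    """Paint the rectangles, in order, onto a black canvas."""
    canvas = [[0] * SIZE for _ in range(SIZE)]
    for x0, y0, x1, y1, shade in shapes:
        for y in range(min(y0, y1), max(y0, y1) + 1):
            for x in range(min(x0, x1), max(x0, x1) + 1):
                canvas[y][x] = shade
    return canvas

def error(canvas, target):
    """Sum of squared pixel differences; lower means a closer match."""
    return sum((c - t) ** 2
               for crow, trow in zip(canvas, target)
               for c, t in zip(crow, trow))

def random_shape(rng):
    """Two random corners plus a random shade."""
    return [rng.randrange(SIZE), rng.randrange(SIZE),
            rng.randrange(SIZE), rng.randrange(SIZE), rng.randrange(256)]

def mutate(shapes, rng):
    """Copy the shapes and randomly change one number in one rectangle."""
    child = [shape[:] for shape in shapes]
    shape = rng.choice(child)
    field = rng.randrange(5)
    shape[field] = rng.randrange(256) if field == 4 else rng.randrange(SIZE)
    return child

def evolve(target, generations=5000, seed=1):
    rng = random.Random(seed)
    best = [random_shape(rng) for _ in range(NUM_SHAPES)]
    best_error = error(render(best), target)
    history = [best_error]
    for _ in range(generations):
        child = mutate(best, rng)
        child_error = error(render(child), target)
        if child_error < best_error:      # keep only mutations that improve the match
            best, best_error = child, child_error
        history.append(best_error)
    return best, history

# A made-up target: a bright square on a dark background (not the Mona Lisa!).
target = [[200 if 2 <= x <= 5 and 2 <= y <= 5 else 30 for x in range(SIZE)]
          for y in range(SIZE)]

best, history = evolve(target)
print("error at start:", history[0])
print("error at end:  ", history[-1])
```

Because a mutation is kept only when it lowers the error, the score can never get worse from one generation to the next. Strictly speaking, that makes this a hill climber with a population of one rather than a full-blown genetic algorithm.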

This particular application of genetic algorithms is very popular. See what many other people have tried.

Evolectronica

This site evolves music by generating loops randomly from sounds and effects. Listeners to the site’s audio streams rank the results, and the genetic algorithm creates “baby loops” for the listeners to rank.

CSS Evolve

This site shows you variations of a web site’s cascading style sheets. You pick the best results, and their genetic algorithm breeds them to create new styles for the web site.
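Both Evolectronica and CSS Evolve put a human in the role of the fitness function: people choose what they like, and the algorithm breeds the winners. Here is a rough Python sketch of that loop. The style "genes", the `pick_favorites` stand-in for the human, and all the other names are hypothetical, and I have no idea how either site actually implements its breeding.

```python
import random

# Hypothetical "genes": a few style settings and the values each may take.
GENES = {
    "font_size":   [12, 14, 16, 18, 20],
    "line_height": [1.2, 1.4, 1.6, 1.8],
    "text_color":  ["#000", "#333", "#036", "#630"],
    "background":  ["#fff", "#eee", "#ffe", "#eef"],
}

def random_style(rng):
    """A random starting style: one value picked for every gene."""
    return {gene: rng.choice(options) for gene, options in GENES.items()}

def crossover(mom, dad, rng):
    """Uniform crossover: each gene comes from one parent or the other."""
    return {gene: rng.choice([mom[gene], dad[gene]]) for gene in GENES}

def mutate(style, rng, rate=0.1):
    """Occasionally replace a gene with a fresh random value."""
    return {gene: rng.choice(GENES[gene]) if rng.random() < rate else value
            for gene, value in style.items()}

def next_generation(favorites, size, rng):
    """Breed a new population from the styles the viewer picked."""
    children = []
    while len(children) < size:
        mom, dad = rng.choice(favorites), rng.choice(favorites)
        children.append(mutate(crossover(mom, dad, rng), rng))
    return children

def pick_favorites(population):
    """Stand-in for the human: pretend the viewer likes big text."""
    ranked = sorted(population, key=lambda s: s["font_size"], reverse=True)
    return ranked[:3]

rng = random.Random(42)
population = [random_style(rng) for _ in range(8)]
for generation in range(5):
    population = next_generation(pick_favorites(population), size=8, rng=rng)

for style in population:
    print(style)
```

On a real site, `pick_favorites` would be replaced by actual clicks or rankings from visitors, which is the slow and interesting part.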

Posted in computing, evolutionary computing, science, tech, toys